The FBI warns that sextortionists have stepped up their activity and are now creating sexually explicit deepfakes using AI and victims' social media photos.

The term sextortion, derived from the words sex and extortion, refers to this kind of activity. The tactic relies on intimidation: scammers often send spam in which they try to convince victims that they hold compromising images or videos, and then demand a ransom.

We previously reported that sextortion ransomware writes letters in foreign languages to bypass filters. You might also be interested in the article: Is Pornhub Safe? Browse Pornhub Without Getting Hacked.

The media also reported that scammers claiming to have hacked the porn site XVideos.com were extorting $969 from users.

In many cases, such extortion rests on empty threats, as the attackers only pretend to have access to explicit content. Now, however, the FBI has warned that “sex extortionists” are using a new tactic. They take public photos and videos of potential victims from social networks and use them to create deepfakes, generating fake but very convincing-looking sexual content.

Although the images and videos are fake, they are well suited to blackmail because they look real, and sending such material to the victim’s family or colleagues can seriously harm the victim and their reputation.

Experts at the FBI’s Internet Crime Complaint Center (IC3) write that, as of April 2023, they have documented a spike in attacks using such fake images and videos, based on content from victims’ social media or on footage captured during video chats.

Law enforcement officers also write that scammers sometimes do not turn to extortion immediately, but first post the deepfakes on porn sites without the victims’ knowledge or consent. In some cases, the extortionists then use these photos and videos to increase pressure on the victim, demanding money for the removal of the material from these sites. The FBI emphasizes that this activity has, unfortunately, already affected minors.

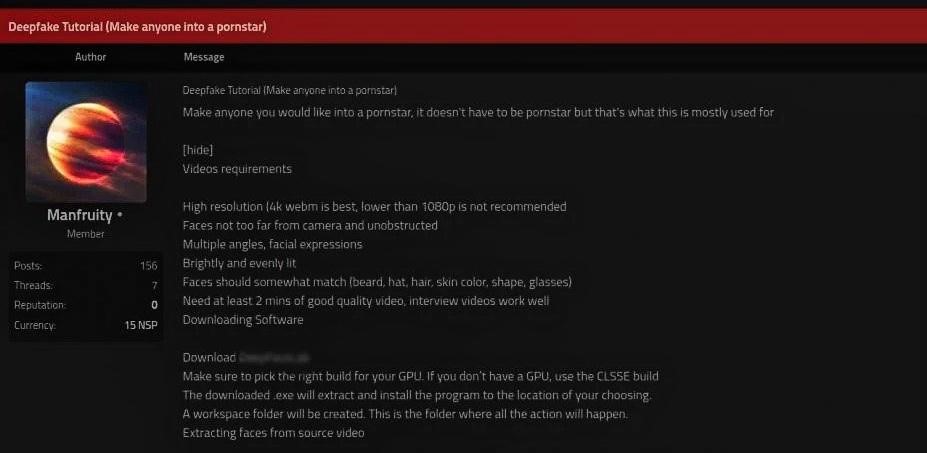

Experts note that the speed with which AI content creation tools are becoming available to a wide audience creates a hostile environment for all Internet users. For example, several projects available for free on GitHub can produce realistic-looking videos from a single image of the victim’s face, without any additional training or datasets.

Many of these tools have built-in protection against misuse, but similar solutions without such restrictions are sold on the dark web.

An advertisement for an AI porn creation tool

The FBI recommends that parents monitor their children’s online activity and talk to them more often about the risks of sharing personal files. Adults who post images and videos online are advised to restrict access to friends only to reduce potential risks. It is also recommended to always hide or blur the faces of children in photos and videos.